All testing focuses on discovering and eliminating defects or variances from what is expected.

Testers need to identify the following two types of defects:

A) Variance from Specifications – A defect from the perspective of the builder of the product.

B) Variance from what is Desired – A defect from a user (or customer) perspective.

Typical software system defects are as under:

1) IT improperly interprets requirements: IT staff misinterprets what the user wants, but correctly implements what the IT people believe is wanted.

2) Users specify the wrong requirements: The specifications given to IT are erroneous.

3) Requirements are incorrectly recorded: IT fails to record the specifications properly.

4) Design specifications are incorrect: The application system design does not achieve the system requirements, but the design as specified is implemented correctly.

5) Program specifications are incorrect: The design specifications are incorrectly interpreted, making the program specifications inaccurate; however, it is possible to properly code the program to achieve the specifications.

6) Errors in program coding: The program is not coded according to the program specifications.

7) Data entry errors: Data entry staff incorrectly enters information into your computers.

8) Testing errors: Tests either falsely detect an error or fail to detect one.

9) Mistakes in error correction: Your implementation team makes errors in implementing your solutions.

10) The corrected condition causes another defect: In the process of correcting a defect, the correction process itself injects additional defects into the application system.

Tags: Software Testing, Software Quality, Software system defects, quality Assurance, software defects

Wednesday, September 9, 2009

What are software design & data defects?

Let us first see what a defect is.

The problems, resulting in the software applications automatically initiating uneconomical or otherwise incorrect actions, can be broadly categorized as software design defects and data defects.

Software Design Defects:

Software design defects that most commonly cause bad decisions by automated decision making applications include:

1) Designing software with incomplete or erroneous decision-making criteria. Actions have been incorrect because the decision-making logic omitted factors that should have been included. In other cases, decision-making criteria included in the software were inappropriate, either at the time of design or later, because of changed circumstances.

2) Failing to program the software as intended by the customer (user), or designer, resulting in logic errors often referred to as programming errors.

3) Omitting needed edit checks for determining completeness of input data. Critical data elements have been left blank on many input documents, and because no checks were included, the applications processed the transactions with incomplete data.
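
The edit check described in point 3 can be sketched in a few lines of Python. This is only an illustration: the field names in REQUIRED_FIELDS and the functions missing_fields and accept are hypothetical, and a real application would define its own critical data elements.

```python
from typing import List, Mapping

# Hypothetical critical data elements; a real application defines its own.
REQUIRED_FIELDS = ("customer_id", "order_date", "quantity")


def missing_fields(record: Mapping[str, object]) -> List[str]:
    """Return the required fields that are absent or left blank."""
    missing = []
    for field in REQUIRED_FIELDS:
        value = record.get(field)
        # Treat None and whitespace-only strings as "left blank".
        if value is None or (isinstance(value, str) and not value.strip()):
            missing.append(field)
    return missing


def accept(record: Mapping[str, object]) -> bool:
    """Accept a transaction only when every critical field is filled in."""
    return not missing_fields(record)
```

With a check like this in place, an input document with a blank critical field would be rejected rather than processed with incomplete data.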

Data Defects:

Input data is frequently a problem. Since much of this data is an integral part of the decision making process, its poor quality can adversely affect the computer-directed actions.

Common problems are:

1) Incomplete data used by automated decision-making applications. Some input documents prepared by people omitted entries in data elements that were critical to the application but were processed anyway. The documents were not rejected when incomplete data was being used. In other instances, data needed by the application that should have become part of IT files was not put into the system.

2) Incorrect data used in automated decision-making application processing. People have often unintentionally introduced incorrect data into the IT system.

3) Obsolete data used in automated decision-making application processing. Data in the IT files became obsolete due to new circumstances. The new data may have been available but was not put into the computer.
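
One way to guard against the obsolete data described in point 3 is to record when each data element was last refreshed and to flag anything older than an agreed limit. The sketch below assumes a 90-day limit (MAX_AGE) and a function named is_obsolete; both are hypothetical and chosen only for illustration.

```python
from datetime import date, timedelta

# Hypothetical limit: how old a record may be before it is treated as obsolete.
MAX_AGE = timedelta(days=90)


def is_obsolete(last_updated: date, today: date, max_age: timedelta = MAX_AGE) -> bool:
    """Return True when the record was not refreshed within max_age."""
    return today - last_updated > max_age
```

A record flagged this way can be sent for review before the application acts on it.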

Tags: Software Testing, Software Quality, Software design defects, software data defects, quality Assurance, software defects

What is statistical process control in software development process?

Let us first see what a defect is.

A defect is an undesirable state. There are two types of defects: process and product. For example, if a Test Plan Standard is not followed, it would be a process defect. However, if the Test Plan did not contain a Statement of Usability as specified in the Requirements documentation, it would be a product defect.

The term quality is used to define a desirable state. A defect is defined as the lack of that desirable state. In order to fully understand what a defect is we must understand quality.

What do we mean by Software Process Defects?

Ideally, the software development process should produce the same results each time the process is executed. For example, if we follow a process that produced one function-point-of-logic in 100 person hours, we would expect that the next time we followed that process, we would again produce one function-point-of-logic in 100 person hours. However, if we followed the process a second time and it took 110 person hours to produce one function-point-of-logic, we would state that there is “variability” in the software development process. Variability is the “enemy” of quality; the concept behind maturing a software development process is to reduce variability.

The concept of measuring and reducing variability is commonly called statistical process control (SPC).

To understand SPC we need to first understand the following:

1) What constitutes an in control process

2) What constitutes an out of control process

3) What are some of the steps necessary to reduce variability within a process
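
As a rough illustration of the first two questions, the sketch below computes control limits from a baseline of past measurements and flags new measurements that fall outside them. The limits of three standard deviations around the mean are a widely used convention that is assumed here, not something taken from this post, and the function names and sample figures are hypothetical.

```python
from statistics import mean, pstdev
from typing import List, Sequence, Tuple


def control_limits(baseline: Sequence[float], sigmas: float = 3.0) -> Tuple[float, float]:
    """Return (lower, upper) limits around the mean of the baseline."""
    center = mean(baseline)
    spread = pstdev(baseline)  # population standard deviation of the baseline
    return center - sigmas * spread, center + sigmas * spread


def out_of_control(baseline: Sequence[float], new_points: Sequence[float],
                   sigmas: float = 3.0) -> List[float]:
    """Return the new measurements that fall outside the control limits."""
    lower, upper = control_limits(baseline, sigmas)
    return [x for x in new_points if x < lower or x > upper]


# Hypothetical person hours per function point from earlier runs of the process.
history = [100.0, 102.0, 98.0, 101.0, 99.0]
flagged = out_of_control(history, [110.0, 103.0])
```

With this baseline, a run that took 110 person hours falls outside the limits, while a run of 103 person hours stays within them.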

Testers need to understand process variability, because the more variance in the process the greater the need for software testing. Following is a brief tutorial on processes and process variability.

Tags: Software Testing, Software Quality, Statistical Process Control, Quality Assurance, SPC

Why should we test Software & who should do that?

Let us first see why we test software.

The simple answer as to why we test software is that developers are unable to build defect-free software. If the development processes were perfect, meaning no defects were produced, testing would not be necessary.

Let’s compare the manufacturing process of producing boxes of cereal to the process of making software. We find that, as is the case for most food manufacturing companies, testing each box of cereal produced is unnecessary. Making software, however, is a significantly different process from making a box of cereal. Cereal manufacturers may produce 50,000 identical boxes of cereal a day, while each software process is unique. This uniqueness introduces defects, and thus makes software testing necessary.

Now let us see why developers are not Good Testers:

Testing by the individual who developed the work has not proven to be a substitute for building and following a detailed test plan.

The disadvantages of a person checking their own work using their own documentation are as under:

1) Misunderstandings will not be detected, because the checker will assume that what the other individual heard from him was correct.

2) Improper use of the development process may not be detected because the individual may not understand the process.

3) The individual may be “blinded” into accepting erroneous system specifications and coding because he falls into the same trap during testing that led to the introduction of the defect in the first place.

4) Information services people are optimistic about their ability to do defect-free work and thus sometimes underestimate the need for extensive testing.

Without a formal division between development and test, an individual may be tempted to improve the system structure and documentation, rather than allocate that time and effort to the test.

Tags: Quality Control, Quality Assurance, Software Testing

Expectations of which customers should we satisfy & how much?

Let us first define excellence:

The Random House College Dictionary defines excellence as “superiority; eminence.” Excellence, then, is a measure or degree of quality. These definitions of quality and excellence are important because they are a starting point for any management team contemplating the implementation of a quality policy. The team must agree on a definition of quality and the degree of excellence it wants to achieve.

The common thread that runs through today's quality improvement efforts is the focus on the customer and, more importantly, customer satisfaction. The customer is the most important person in any process.

How many types of customers are there?

Customers may be either internal or external. The question of customer satisfaction (whether that customer is located in the next workstation, building, or country) is the essence of a quality product. Identifying customers' needs in the areas of what, when, why, and how is an essential part of process evaluation and may be accomplished only through communication.

The internal customer is the person or group that receives the results (outputs) of any individual's work. The outputs may include a product, a report, a directive, a communication, or a service; in fact, anything that is passed between people or groups. Internal customers include peers, subordinates, supervisors, and other units within the organization. Their expectations must also be known and exceeded to achieve quality.

External customers are those using the products or services provided by the organization. Organizations need to identify and understand their customers. The challenge is to understand and exceed their expectations.

An organization must focus on both internal and external customers and be dedicated to exceeding customer expectations.

Tags: Quality Control, Quality Assurance, Software Testing, Customer expectations, Excellence

Quality Gaps from the view point of Customer and Producer

Let us first define Quality:

Quality is frequently defined as meeting the customer's requirements the first time and every time. Quality is also defined as conformance to a set of customer requirements that, if met, result in a product that is fit for its intended use.

Quality is much more than the absence of defects, which allows us to meet customer expectations. Quality requires controlled process improvement, allowing loyalty in organizations. Quality can only be achieved by the continuous improvement of all systems and processes in the organization, not only the production of products and services but also the design, development, service, purchasing, administration, and, indeed, all aspects of the transaction with the customer. All must work together toward the same end.

Quality can only be seen through the eyes of the customers. An understanding of the customer's expectations (effectiveness) is the first step; then exceeding those expectations (efficiency) is required. Communications will be the key. Exceeding customer expectations assures meeting all the definitions of quality.

Now let us see what Quality Software is.

There are two important definitions of quality software:

1) The producer’s view of quality software means meeting requirements.

2) The customer’s (user’s) view of quality software means fit for use.

These two definitions are not inconsistent. Meeting requirements is the producer’s definition of quality; it means that the person building the software builds it in accordance with requirements. The fit for use definition is a user’s definition of software quality; it means that the software developed by the producer meets the user’s need regardless of the software requirements.

The Two Software Quality Gaps:

In most IT groups, there are two gaps, as illustrated in Figure 1-6. They reflect the different views of software quality held by the customer and the producer.

The first gap is the producer gap. It is the gap between what is specified to be delivered, meaning the documented requirements and internal IT standards, and what is actually delivered. The second gap, the customer gap, is the gap between what the producer actually delivers and what the customer expects.

To close the customer’s gap, the IT quality function must understand the true needs of the user. This can be done by the following actions:

1) Customer surveys

2) JAD (joint application development) sessions – the producer and user come together and negotiate and agree upon requirements

3) More user involvement while building information products

This is accomplished by changing the processes to close the user gap, so that they consistently produce the software and services that the user needs. Software testing professionals can participate in closing these “quality” gaps.

Tags: Quality Control, Quality Assurance, Software Testing, Software Quality Gaps

What are the different perceptions of Quality?

The definition of “Quality” is a factor in determining the scope of software testing. Although there are multiple quality philosophies documented, it is important to note that most contain the same core components:

1) Quality is based upon customer satisfaction

2) Your organization must define quality before it can be achieved

3) Management must lead the organization through any improvement efforts

There are five perspectives of quality. Each of these perspectives must be considered as important to the customer.

1. Transcendent – I know it when I see it

2. Product-Based – Possesses desired features

3. User-Based – Fitness for use

4. Development & Manufacturing-Based – Conforms to requirements

5. Value-Based – At an acceptable cost

Peter R. Scholtes introduces the contrast between effectiveness (doing the right things) and efficiency (doing things right). Quality organizations must be both effective and efficient.

Patrick Townsend examines quality in fact and quality in perception as explained by four different views given below.

Quality in fact is usually the supplier's point of view, while quality in perception is the customer's. Any difference between the former and the latter can cause problems between the two.

1st View:

Quality in Fact: Doing the right thing.

Quality in Perception: Delivering the right product.

2nd View:

Quality in Fact: Doing it the right way.

Quality in Perception: Satisfying our customer’s needs.

3rd View:

Quality in Fact: Doing it right the first time.

Quality in Perception: Meeting the customer’s expectations.

4th View:

Quality in Fact: Doing it on time.

Quality in Perception: Treating every customer with integrity, courtesy, and respect.

An organization’s quality policy must define and view quality from its customers’ perspectives. If there are conflicts, they must be resolved.

Tags: Quality Control, Quality Assurance, Software Testing, Software Quality Perceptions

What are the Software Quality Factors?

In defining the scope of testing, the risk factors become the basis or objective of testing. The objectives for many tests are associated with testing software quality factors. The software quality factors are attributes of the software that, if they are wanted and not present, pose a risk to the success of the software, and thus constitute a business risk. For example, if the software is not easy to use, the resulting processing may be incorrect. The definition of the software quality factors and determining their priority enables the test process to be logically constructed.

When software quality factors are considered in the development of the test strategy, results from testing successfully meet your objectives.

The primary purpose of applying software quality factors in a software development program is to improve the quality of the software product. Rather than simply measuring, the concepts are based on achieving a positive influence on the product, to improve its development.

How can we Identify Important Software Quality Factors?

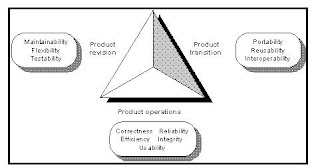

Following Figure describes the Software Quality Factors defined by McCall.

Brief explanation of Eleven Important Software Quality Factors:

1) Correctness: Extent to which a program satisfies its specifications and fulfills the user's mission objectives.

2) Reliability: Extent to which a program can be expected to perform its intended function with required precision.

3) Efficiency: The amount of computing resources and code required by a program to perform a function.

4) Integrity: Extent to which access to software or data by unauthorized persons can be controlled.

5) Usability: Effort required to learn, operate, prepare input for, and interpret output of a program.

6) Maintainability: Effort required to locate and fix an error in an operational program.

7) Testability: Effort required to test a program to ensure that it performs its intended function.

8) Flexibility: Effort required to modify an operational program.

9) Portability: Effort required to transfer software from one configuration to another.

10) Reusability: Extent to which a program can be used in other applications - related to the packaging and scope of the functions that programs perform.

11) Interoperability: Effort required to couple one system with another.

Tags: Quality Control, Quality Assurance, Software Testing, Software Quality Factors

What is the Cost of Quality?

When calculating the total costs associated with the development of a new application or system, three cost components must be considered. The Cost of Quality, as shown in the following figure, is all the costs that occur beyond the cost of producing the product “right the first time.” Cost of Quality is a term used to quantify the total cost of prevention and appraisal, and costs associated with the production of software.

The Cost of Quality includes the additional costs associated with assuring that the product delivered meets the quality goals established for the product. It covers all costs associated with the prevention, identification, and correction of product defects.

The three categories of costs associated with producing quality products are:

1) Prevention Costs:

Money required to prevent errors and to do the job right the first time. These normally require up-front costs for benefits that will be derived months or even years later. This category includes money spent on establishing methods and procedures, training workers, acquiring tools, and planning for quality. Prevention money is all spent before the product is actually built.

2) Appraisal Costs:

Money spent to review completed products against requirements. Appraisal includes the cost of inspections, testing, and reviews. This money is spent after the product is built but before it is shipped to the user or moved into production.

3) Failure Costs:

All costs associated with defective products that have been delivered to the user or moved into production. Some failure costs involve repairing products to make them meet requirements. Others are costs generated by failures such as the cost of operating faulty products, damage incurred by using them, and the costs associated with operating a Help Desk.

The Cost of Quality will vary from one organization to the next. The majority of costs associated with the Cost of Quality are associated with the identification and correction of defects. To minimize production costs, the project team must focus on defect prevention. The goal is to optimize the production process to the extent that rework is eliminated and inspection is built into the production process. The IT quality assurance group must identify the costs within these three categories, quantify them, and then develop programs to minimize the totality of these three costs. Applying the concepts of continuous testing to the systems development process can reduce the cost of quality.
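As a rough illustration of how a quality assurance group might quantify the three categories and see where the money goes, here is a minimal Python sketch. The class name, field names, and figures are hypothetical and made up for illustration only; they are not taken from any real project.

```python
from dataclasses import dataclass


@dataclass
class CostOfQuality:
    """Hypothetical container for the three cost categories (any currency)."""
    prevention: float  # spent before the product is built
    appraisal: float   # spent after the build, before release
    failure: float     # spent on defects that reached the user

    def total(self) -> float:
        """Cost of Quality as the sum of the three categories."""
        return self.prevention + self.appraisal + self.failure

    def share(self) -> dict:
        """Fraction of the total that each category represents."""
        t = self.total()
        if t == 0:
            return {"prevention": 0.0, "appraisal": 0.0, "failure": 0.0}
        return {
            "prevention": self.prevention / t,
            "appraisal": self.appraisal / t,
            "failure": self.failure / t,
        }


# Illustrative numbers only.
coq = CostOfQuality(prevention=10_000, appraisal=30_000, failure=60_000)
print(coq.total())
print(coq.share())
```

Tracking the shares over time is one simple way to check whether money shifted into prevention is actually reducing appraisal and failure costs.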

Tags: Quality Control, Quality Assurance, Software Testing, Cost of Quality

Monday, June 8, 2009

Differentiation between Quality Control & Quality Assurance as defined by Industry Experts

Many industry experts have arrived at the following statements to differentiate quality control from quality assurance:

1) Quality control relates to a specific product or service.

2) Quality control verifies whether specific attribute(s) are in, or are not in, a specific product or service.

3) Quality control identifies defects for the primary purpose of correcting defects.

4) Quality control is the responsibility of the team/worker.

5) Quality control is concerned with a specific product.

6) Quality assurance helps establish processes.

7) Quality assurance sets up measurement programs to evaluate processes.

8) Quality assurance identifies weaknesses in processes and improves them.

9) Quality assurance is a management responsibility, frequently performed by a staff function.

10) Quality assurance is concerned with all of the products that will ever be produced by a process.

11) Quality assurance is sometimes called quality control over quality control because it evaluates whether quality control is working.

12) Quality assurance personnel should never perform quality control unless it is to validate quality control.

Tags: Quality Control, Quality Assurance, Software Testing

Understand the difference between quality control and quality assurance

There is often confusion in the IT industry regarding the difference between quality control and quality assurance. Many “quality assurance” groups, in fact, practice quality control. Quality methods can be segmented into two categories: preventive methods and detective methods. This distinction serves as the mechanism to distinguish quality assurance activities from quality control activities. This discussion explains the critical difference between control and assurance, and how to recognize a control practice from an assurance practice.

Quality has the following two working definitions:

1) Definition from Producer’s Viewpoint: The quality of the product meets the requirements.

2) Definition from Customer’s Viewpoint: The quality of the product is “fit for use” or meets the customer’s needs.

There are many “products” produced from the software development process in addition to the software itself, including requirements, design documents, data models, GUI screens, programs, and so on. To ensure that these products meet both requirements and user needs, both quality assurance and quality control are necessary.

Let us understand Quality Assurance:

Quality assurance is a planned and systematic set of activities necessary to provide adequate confidence that products and services will conform to specified requirements and meet user needs. Quality assurance is a staff function, responsible for implementing the quality policy defined through the development and continuous improvement of software development processes.

Quality assurance is an activity that establishes and evaluates the processes that produce products. If there is no need for process, there is no role for quality assurance. For example, quality assurance activities in an IT environment would determine the need for, acquire, or help install the following:

1) System development methodologies

2) Estimation processes

3) System maintenance processes

4) Requirements definition processes

5) Testing processes and standards

Once installed, quality assurance would measure these processes to identify weaknesses, and then correct those weaknesses to continually improve the process.

Now let us understand Quality Control:

Quality control is the process by which product quality is compared with applicable standards, and the action taken when nonconformance is detected. Quality control is a line function, and the work is done within a process to ensure that the work product conforms to standards and requirements.

Quality control activities focus on identifying defects in the actual products produced. These activities begin at the start of the software development process with reviews of requirements, and continue until all application testing is complete.

It is possible to have quality control without quality assurance. For example, a test team may be in place to conduct system testing at the end of development, regardless of whether that system is produced using a software development methodology.

Tags: Quality Control, Quality Assurance, Software Testing

Sunday, June 7, 2009

What is the difference between Acceptance Testing and System Testing?

Acceptance testing is performed by user personnel and may include assistance by software testers. System testing is performed by developers and/or software testers. The objective of both types of testing is to assure that when the software is complete it will be acceptable to the user.

System test should be performed before acceptance testing. There is a logical sequence for testing, and an important reason for the logical steps of the different levels of testing. Unless each level of testing fulfills its objective, the following level of testing will have to compensate for weaknesses in testing at the previous level.

In most organization units, integration and system testing will focus on determining whether or not the software specifications have been implemented as specified. In conducting testing to meet this objective it is unimportant whether or not the software specifications are those needed by the user. The specifications should be the agreed upon specifications for the software system.

The system specifications tend to focus on the software specifications. They rarely address the processing concerns over input to the software, nor do they address the concerns over the ability of user personnel to effectively use the system in performing their day-to-day business activities.

Acceptance testing should focus on input processing, use of the software in the user organization, and whether or not the specifications meet the true processing needs of the user. Sometimes these user needs are not included in the specifications; sometimes these user needs are incorrectly specified in the software specifications; and sometimes the user was unaware that without certain attributes of the system, the system was not acceptable to the user. Examples include users not specifying the skill level of the people who will be using the system, processing being specified without a turnaround time, and users not knowing that they have to specify the maintainability attributes of the software.

Effective software testers will focus on all three reasons why the software specified may not meet the user’s true needs. For example, they may recommend developmental reviews with users involved. Testers may ask users if the quality factors are important to them in the operational software. Testers may work with users to define acceptance criteria early in a development process so that the developers are aware and can address those acceptance criteria.

Tags: User Acceptance Testing, Software Testing, Quality Assurance

Methodology of assigning Acceptance Criteria by the users?

The user must assign the criteria the software must meet to be deemed acceptable. Ideally, this is included in the software requirement specifications.

In preparation for developing the acceptance criteria, the user should:

1) Acquire full knowledge of the application for which the system is intended

2) Become fully acquainted with the application as it is currently implemented by the user’s organization

3) Understand the risks and benefits of the development methodology that is to be used in correcting the software system

4) Fully understand the consequences of adding new functions to enhance the system.

Acceptance requirements that a system must meet can be divided into the following four categories:

1) Functionality Requirements: These requirements relate to the business rules that the system must execute.

2) Performance Requirements: These requirements relate to operational aspects, such as time or resource constraints.

3) Interface Quality Requirements: These requirements relate to connections from one component to another component of processing (e.g., human-machine, machine-module).

4) Overall Software Quality Requirements: These requirements specify limits for factors or attributes such as reliability, testability, correctness, and usability.

The criterion that a requirements document may have no more than five statements with missing information is an example of quantifying the quality factor of completeness. Assessing the criticality of a system is important in determining quantitative acceptance criteria. The user should determine the degree of criticality of the requirements by the above acceptance requirements categories.

By definition, all safety criteria are critical; and by law, certain security requirements are critical.

Some typical factors affecting criticality are:

a) Importance of the system to organization or industry

b) Consequence of failure

c) Complexity of the project

d) Technology risk

e) Complexity of the user environment

Products or pieces of products with critical requirements do not qualify for acceptance if they do not satisfy their acceptance criteria. A product with failed non-critical requirements may qualify for acceptance, depending upon quantitative acceptance criteria for quality factors. Clearly, if a product fails a substantial number of non-critical requirements, the quality of the product is questionable.
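The acceptance rule described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name and the `max_noncritical_failures` threshold are hypothetical, and the text does not prescribe any particular value for the quantitative criterion.

```python
def qualifies_for_acceptance(results, max_noncritical_failures):
    """Decide whether a product qualifies for acceptance.

    results: list of (is_critical, passed) tuples, one per requirement.
    max_noncritical_failures: hypothetical quantitative threshold chosen
    by the user for failed non-critical requirements.
    """
    noncritical_failures = 0
    for is_critical, passed in results:
        if passed:
            continue
        if is_critical:
            # Any failed critical requirement blocks acceptance outright.
            return False
        noncritical_failures += 1
    # Non-critical failures are tolerated only up to the agreed threshold.
    return noncritical_failures <= max_noncritical_failures


# Illustrative data: one critical requirement passed, one non-critical failed.
sample = [(True, True), (False, False), (False, True)]
print(qualifies_for_acceptance(sample, max_noncritical_failures=1))
print(qualifies_for_acceptance(sample + [(True, False)], max_noncritical_failures=1))
```

The first call tolerates the single non-critical failure; the second is rejected because a critical requirement failed, regardless of the threshold.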

The user has the responsibility of ensuring that acceptance criteria contain pass or fail criteria. The acceptance tester should approach testing assuming that the least acceptable corrections have been made; while the developer believes the corrected system is fully acceptable. Similarly, a contract with what could be interpreted as a range of acceptable values could result in a corrected system that might never satisfy the user’s interpretation of the acceptance criteria.

For specific software systems, users must examine their projects’ characteristics and criticality in order to develop expanded lists of acceptance criteria for those software systems. Some of the criteria may change according to the phase of correction for which criteria are being defined. For example, for requirements, the “testability” quality may mean that test cases can be developed automatically.

The user must also establish acceptance criteria for individual elements of a product. These criteria should be the acceptable numeric values or ranges of values. The buyer should compare the established acceptable values against the number of problems presented at acceptance time. For example, if the number of inconsistent requirements exceeds the acceptance criteria, then the requirements document should be rejected. At that time, the established procedures for iteration and change control go into effect.

Acceptance Criteria related Information Required to be Documented by the users:

This information should be prepared for each hardware or software project within the overall project, with the acceptance criteria requirements listed and uniquely numbered for control purposes.

Criteria - 1: Hardware / Software Project:

Information to be documented: The name of the project being acceptance-tested. This is the name the user or customer calls the project.

Criteria - 2: Number:

Information to be documented: A sequential number identifying acceptance criteria.

Criteria - 3: Acceptance Requirement:

Information to be documented: A user requirement that will be used to determine whether the corrected hardware/software is acceptable.

Criteria - 4: Critical / Non-Critical:

Information to be documented: Indicate whether the acceptance requirement is critical, meaning that it must be met, or non-critical, meaning that it is desirable but not essential.

Criteria - 5: Test Result:

Information to be documented: Indicates after acceptance testing whether the requirement is acceptable or not acceptable, meaning that the project is rejected because it does not meet the requirement.

Criteria - 6: Comments:

Information to be documented: Clarify the criticality of the requirement; or indicate the meaning of the test result rejection. For example: The software cannot be run; or management will make a judgment after acceptance testing as to whether the project can be run.
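One possible way to hold these six items is a small record type. The sketch below uses Python; the class name, field names, helper function, and sample requirements are all hypothetical and chosen only to mirror the six items listed above.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AcceptanceCriterion:
    """Hypothetical record mirroring the six documented items."""
    project: str                     # 1: hardware / software project name
    number: int                      # 2: sequential number
    requirement: str                 # 3: acceptance requirement
    critical: bool                   # 4: critical (True) or non-critical (False)
    accepted: Optional[bool] = None  # 5: test result, None until tested
    comments: str = ""               # 6: free-text comments


def number_criteria(project, requirements):
    """Build records with sequential numbers starting at 1.

    requirements: list of (requirement_text, is_critical) pairs.
    """
    return [
        AcceptanceCriterion(project, i, text, is_critical)
        for i, (text, is_critical) in enumerate(requirements, start=1)
    ]


# Illustrative project and requirements only.
criteria = number_criteria(
    "Payroll",
    [
        ("Net pay is computed for every active employee", True),
        ("Reports can be exported to a spreadsheet", False),
    ],
)
criteria[0].accepted = True
criteria[1].accepted = False
criteria[1].comments = "Management will judge whether the project can be run."
for c in criteria:
    print(c.number, c.critical, c.accepted, c.comments)
```

Leaving the test result empty until acceptance testing has run keeps "not yet tested" distinct from "not acceptable".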

After defining the acceptance criteria, determine whether meeting the criteria is critical to the success of the system.

Tags: User Acceptance Testing, Software Testing, Quality Assurance

What is the role of Software Testers in acceptance testing?

Software testers can have one of three roles in acceptance testing.

Role - 1) No involvement at all:

In that instance the user accepts full responsibility for developing and executing the acceptance test plan.

Role - 2) Act as an advisor:

The user will develop and execute the test plan, but rely on software testers to compensate for a lack of competency on the part of the users, or to provide a quality control role.

Role - 3) Be an active participant in software testing:

This role can include any or all of the acceptance testing activities. The role of the software tester cannot include defining the acceptance criteria, or making the decision as to whether or not the software can be placed into operation. If software testers are active participants in acceptance testing, then they may conduct any part of acceptance testing up to the point where the results of acceptance testing are documented.

A role that software testers should accept is developing the acceptance test process. This means that they will develop a process for defining acceptance criteria, develop a process for building an acceptance test plan, develop a process to execute the acceptance test plan, and develop a process for recording and presenting the results of acceptance testing.

Tags: User Acceptance Testing, Software Testing, Quality Assurance

What is the role of the Users in acceptance testing?

The user’s role in acceptance testing begins with the user determining whether acceptance testing will or will not occur. If the totality of the user’s needs has been incorporated into the software requirements, then the software testers should test to assure those needs are met in unit, integration, and system testing.

If acceptance testing is to occur, the user has primary responsibility for planning and conducting acceptance testing. This assumes that the users have the necessary testing competency to develop and execute an acceptance test plan.

If the user does not have the needed competency to develop and execute an acceptance test plan, the user will need to acquire that competency from other organizational units or outsource the activity. Normally, the IT organization’s software testers would assist the user in the acceptance testing process if additional competency is needed.

The users will have the following minimum roles in acceptance testing:

1) Defining acceptance criteria in a testable format

2) Providing the use cases that will be used in acceptance testing

3) Training user personnel in using the new software system

4) Providing the necessary resources, primarily user staff personnel, for acceptance testing

5) Comparing the actual acceptance testing results with the desired acceptance testing results. This may be performed using testing software.

6) Making decisions as to whether additional work is needed prior to placing the software in operation, whether the software can be placed in operation with additional work to be done, or whether the software is fully acceptable and can be placed into production as is

If the software does not fully meet the user needs, but will be placed into operation, the user must develop a strategy to anticipate problems and pre-define the actions to be taken should those problems occur.
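Roles 1, 5, and 6 above can be illustrated with a small sketch. It is only an illustration under stated assumptions: the criterion names, the `mandatory` flag, and the rule that maps failed criteria to one of the three decisions are hypothetical and are not prescribed by the text above. In practice the user decides how failures are weighed.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    # The three outcomes listed in role 6.
    MORE_WORK_NEEDED = "additional work needed before operation"
    ACCEPT_WITH_OPEN_WORK = "place in operation with additional work to be done"
    FULLY_ACCEPTABLE = "fully acceptable as is"


@dataclass
class Criterion:
    name: str         # hypothetical criterion label
    desired: object   # desired acceptance test result
    mandatory: bool   # assumption: a failed mandatory criterion blocks operation


def compare(criteria, actual_results):
    """Return the names of criteria whose actual result differs from the desired one."""
    return [c.name for c in criteria if actual_results.get(c.name) != c.desired]


def decide(criteria, actual_results):
    """Map the comparison to one of the three decisions (assumed rule)."""
    failed = set(compare(criteria, actual_results))
    if not failed:
        return Decision.FULLY_ACCEPTABLE
    if any(c.mandatory for c in criteria if c.name in failed):
        return Decision.MORE_WORK_NEEDED
    return Decision.ACCEPT_WITH_OPEN_WORK


# Hypothetical acceptance criteria written in a testable format.
criteria = [
    Criterion("invoice_total_cents", 15000, mandatory=True),
    Criterion("overdue_notice_sent", True, mandatory=False),
]

# Hypothetical actual results recorded during acceptance testing.
actual = {"invoice_total_cents": 15000, "overdue_notice_sent": False}

print(compare(criteria, actual))
print(decide(criteria, actual).value)
```

Writing each criterion as a name plus a desired result is what makes it testable: the comparison in role 5 becomes mechanical, and only the decision in role 6 still requires the user's judgment.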

Tags: User Acceptance Testing, Software Testing, Quality Assurance

Friday, June 5, 2009

Understanding of the objective of User Acceptance Testing

Let us begin with the understanding of the objective of User Acceptance Testing:

The objective of software development is to develop software that meets the true needs of the user, not just the system specifications. To accomplish this, testers should work with the users early in a project to clearly define the criteria that would make the software acceptable in meeting the user needs. As much as possible, once the acceptance criteria have been established, testers should integrate those criteria into all aspects of development. The same process can be used by software testers when users are unavailable for testing, when diverse users use the same software, and for beta testing software.

Although acceptance testing is a customer and user responsibility, testers normally help develop an acceptance test plan; include that plan in the system test plan to avoid test duplication; and, in many cases, perform or assist in performing the acceptance test.

What are the key Concepts of Acceptance Testing?

It is important that both software testers and user personnel understand the basics of acceptance testing.

Acceptance testing is formal testing conducted to determine whether a software system satisfies its acceptance criteria and to enable the buyer to determine whether to accept the system. Software acceptance testing at delivery is usually the final opportunity for the buyer to examine the software and to seek redress from the developer for insufficient or incorrect software.

Frequently, the software acceptance test is the only time the buyer is involved in acceptance and the only opportunity the buyer has to identify deficiencies in a critical software system. The term critical implies economic or social catastrophe, such as loss of life; it also implies strategic importance to an organization’s long-term economic welfare. The buyer is thus exposed to the considerable risk that a needed system will never operate reliably because of inadequate quality control during development. To reduce the risk of problems arising at delivery or during operation, the buyer must become involved with software acceptance early in the acquisition process.

Software acceptance is an incremental process of approving or rejecting software systems during development or maintenance, according to how well the software satisfies predefined criteria. For the purpose of software acceptance, the activities of software maintenance are assumed to share the properties of software development.

Acceptance decisions occur at pre-specified times when processes, support tools, interim documentation, segments of the software, and finally the total software system must meet predefined criteria for acceptance. Subsequent changes to the software may affect previously accepted elements. The final acceptance decision occurs with verification that the delivered documentation is adequate and consistent with the executable system and that the complete software system meets all buyer requirements. This decision is usually based on software acceptance testing.
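The incremental nature of these decisions can be sketched as a simple acceptance log. This is a minimal illustration, not a prescribed procedure: the class name, the element names, and the version labels are hypothetical. The policy that any later change withdraws an earlier acceptance until the element is reviewed again is also an assumption; the text above says only that changes may affect previously accepted elements.

```python
class AcceptanceLog:
    """Tracks which elements have been accepted, and at which version."""

    def __init__(self):
        self._accepted = {}  # element name -> version that was accepted

    def record_decision(self, element, version, meets_criteria):
        """Record an acceptance decision made at a pre-specified point."""
        if meets_criteria:
            self._accepted[element] = version
        else:
            self._accepted.pop(element, None)
        return meets_criteria

    def record_change(self, element, new_version):
        """Assumed policy: a changed element must be reviewed again."""
        if self._accepted.get(element) != new_version:
            self._accepted.pop(element, None)

    def ready_for_final_acceptance(self, required_elements):
        """True only if every required element is currently accepted."""
        return all(e in self._accepted for e in required_elements)


# Hypothetical elements subject to interim acceptance.
required = ["interim documentation", "billing module"]

log = AcceptanceLog()
log.record_decision("interim documentation", "v1", True)
log.record_decision("billing module", "v1", True)
print(log.ready_for_final_acceptance(required))

# A subsequent change to an already accepted element.
log.record_change("billing module", "v2")
print(log.ready_for_final_acceptance(required))
```

Recording the accepted version alongside each decision keeps the final acceptance decision honest: it can only be made once every element has been accepted in its current form.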

Formal final software acceptance testing must occur at the end of the development process. It consists of tests to determine whether the developed system meets predetermined functionality, performance, quality, and interface criteria. Criteria for security or safety may be mandated legally or by the nature of the system.

Acceptance testing involves procedures for identifying acceptance criteria for interim life cycle products and for accepting them. Final acceptance not only acknowledges that the entire software product adequately meets the buyer’s requirements, but also acknowledges that the process of development was adequate.

Tags: User Acceptance Testing, Software Testing, Quality Assurance

What are the benefits of software acceptance Tests as a Life Cycle Process?

Let us understand the benefits of software acceptance tests as a life cycle process:

A) Early detection of software problems (and time for the customer or user to plan for possible late delivery)

B) Preparation of appropriate test facilities

C) Early consideration of the user’s needs during software development

D) Accountability for software acceptance belongs to the customer or user of the software

What are the responsibilities of customer or end users in acceptance testing?

1) Ensure user involvement in developing system requirements and acceptance criteria

2) Identify interim and final products for acceptance, their acceptance criteria, and schedule

3) Plan how and by whom each acceptance activity will be performed

4) Plan resources for providing information on which to base acceptance decisions

5) Schedule adequate time for buyer staff to receive and examine products and evaluations prior to acceptance review

6) Prepare the Acceptance Plan

7) Respond to the analyses of project entities before accepting or rejecting

8) Approve the various interim software products against quantified criteria at interim points

9) Perform the final acceptance activities, including formal acceptance testing, at delivery

10) Make an acceptance decision for each product

The customer or user must be actively involved in defining the type of information required, evaluating that information, and deciding at various points in the development activities if the products are ready for progression to the next activity.

Acceptance testing is designed to determine whether the software is fit for use. The concept of fit for use is important in both design and testing. Design must attempt to build the application to fit into the user’s business process; the test process must ensure a prescribed degree of fit. Testing that concentrates on structure and requirements may fail to assess fit, and thus fail to test the value of the automated application to the business.

What are the components of fit?

1) Data: The reliability, timeliness, consistency, and usefulness of the data included in the automated application.

2) People: People should have the skills, training, aptitude, and desire to properly use and interact with the automated application.

3) Structure: The structure is the proper development of application systems to optimize technology and satisfy requirements.

4) Rules: The rules are the procedures to follow in processing the data.

Tags: Software Testing, User Acceptance Testing
